COPY FROM

COPY ... FROM is a statement that allows loading data from files on the filesystem and importing them into SQreamDB tables. This is the recommended way for bulk loading CSV files into SQreamDB. In general, COPY moves data between filesystem files and SQreamDB tables.

Note

Learn how to migrate from CSV files in the CSV guide

To copy data from a table to a file, see COPY TO.

To load Parquet or ORC files, see CREATE FOREIGN TABLE

Permissions

The role must have the INSERT permission to the destination table.

Syntax

COPY [schema name.]table_name [ (<column_name>) [, ...] ]

FROM WRAPPER fdw_name

OPTIONS

(

[ copy_from_option [, ...] ]

)

schema_name ::= identifer

table_name ::= identifier

copy_from_option ::=

LOCATION = { filename | S3 URI | HDFS URI }

| QUOTE = {'C' | E'\ooo')

| OFFSET = { offset }

| LIMIT = { limit }

| DELIMITER = '{ delimiter }'

| RECORD_DELIMITER = '{ record delimiter }'

| ERROR_LOG = '{ local filepath }'

| REJECTED_DATA = '{ local filepath }'

| CONTINUE_ON_ERROR = { true | false }

| ERROR_COUNT = '{ error count }'

| DATETIME_FORMAT = '{ parser format }'

| AWS_ID = '{ AWS ID }'

| AWS_SECRET = '{ AWS Secret }'

| DELETE_SOURCE_ON_SUCCESS = { true | false }

offset ::= positive integer

limit ::= positive integer

delimiter ::= string

record delimiter ::= string

error count ::= integer

parser_format ::= see supported parser table below

AWS ID ::= string

AWS Secret ::= string

Note

Some options are applicable to CSVs only.

These include:

OFFSET, LIMIT, DELIMITER, RECORD_DELIMITER, REJECTED_DATA, DATETIME_FORMAT

Elements

Parameter |

Default value |

Value range |

Description |

|---|---|---|---|

|

None |

Table to copy data into |

|

|

|

Specifies an alternative quote character. The quote character must be a single, 1-byte printable ASCII character, and the equivalent octal syntax of the copy command can be used. The quote character cannot be contained in the field delimiter, the record delimiter, or the null marker. |

|

|

|

The name of the Foreign Data Wrapper to use |

|

|

None |

A path on the local filesystem, S3, or HDFS URI. For example, |

|

|

|

>1, but no more than the number of lines in the first file |

The row number to start with. The first row is |

|

unlimited |

1 to 2147483647. |

When specified, tells SQream DB to stop loading after the specified number of rows. Unlimited if unset. |

|

|

Almost any ASCII character, See field delimiters section below |

Specifies the field terminator - the character (or characters) that separates fields or columns within each row of the file. |

|

|

|

Specifies the row terminator - the character that separates lines or rows, also known as a new line separator. |

|

No error log |

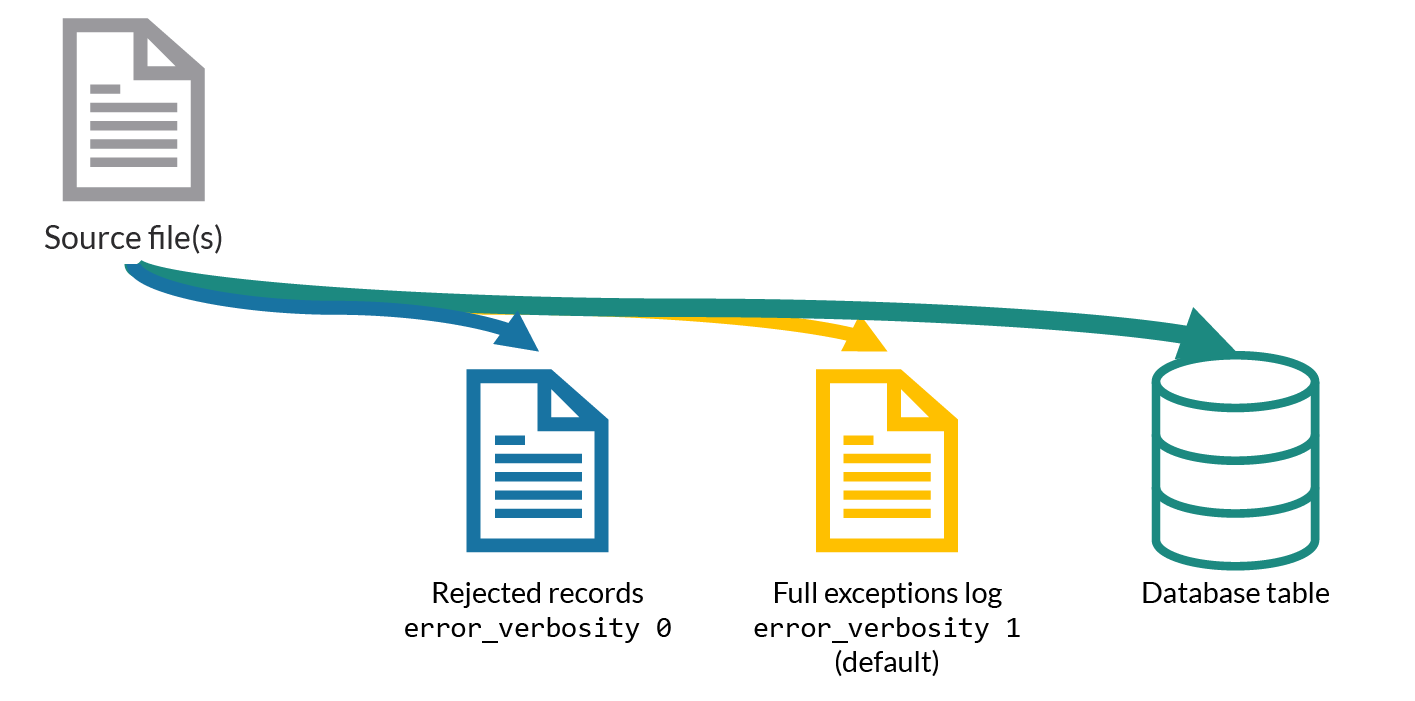

When used, the

|

|

|

Inactive |

When used, the

|

|

|

|

|

Specifies if errors should be ignored or skipped. When set to This parameter should be set together with |

|

|

1 to 2147483647 |

Specifies the threshold for the maximum number of faulty records that will be ignored. This setting must be used in conjunction with |

|

ISO8601 for all columns |

Allows specifying a non-default date formats for specific columns |

|

|

None |

Specifies the authentication details for secured S3 buckets |

|

|

|

|

When set to |

Supported Date Formats

Name |

Pattern |

Examples |

|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Pattern |

Description |

|---|---|

|

four digit year representation (0000-9999) |

|

two digit month representation (01-12) |

|

two digit day of month representation (01-31) |

|

short month representation (Jan-Dec) |

|

short day of week representation (Sun-Sat). |

|

two digit 24 hour representation (00-23) |

|

two digit 12 hour representation (00-12) |

|

uppercase AM/PM representation |

|

two digit minute representation (00-59) |

|

two digit seconds representation (00-59) |

|

3 digits fraction representation for milliseconds (000-999) |

Note

These date patterns are not the same as date parts used in the DATEPART function.

Supported Field Delimiters

Field delimiters can be one or more characters.

Customizing Quotations Using Alternative Characters

Syntax:

COPY t FROM wrapper csv_fdw OPTIONS (location = '/tmp/source_file.csv', quote='@');

COPY t TO wrapper csv_fdw OPTIONS (location = '/tmp/destination_file.csv', quote='@');

Example:

The following is an example of line taken from a CSV when customizing quotations using a character:

Pepsi-"Cola",@Coca-"Cola"@,Sprite,Fanta

Customizing Quotations Using ASCII Character Codes

Syntax:

copy t from wrapper csv_fdw options (location = '/tmp/source_file.csv', quote=E'\064');

copy t to wrapper csv_fdw options (location = '/tmp/destination_file.csv', quote=E'\064');

Example:

The following is an example of line taken from a CSV when customizing quotations using an ASCII character code:

Pepsi-"Cola",@Coca-"Cola"@,Sprite,Fanta

Multi-Character Delimiters

SQreamDB supports multi-character field delimiters, sometimes found in non-standard files.

A multi-character delimiter can be specified. For example, DELIMITER '%%', DELIMITER '{~}', etc.

Printable Characters

All printable ASCII character (except for N) can be used as a delimiter without special syntax. The default CSV field delimiter is a comma (,).

The following table shows the supported printable ASCII characters:

Character |

Description |

ASCII |

Octal |

Hex |

Binary |

HTML Code |

HTML Name |

(Space) |

Space |

32 |

40 |

20 |

100000 |

|

|

! |

Exclamation Mark |

33 |

41 |

21 |

100001 |

! |

! |

# |

Hash or Number |

35 |

43 |

23 |

100011 |

# |

# |

$ |

Dollar Sign |

36 |

44 |

24 |

100100 |

$ |

$ |

% |

Percentage |

37 |

45 |

25 |

100101 |

% |

% |

& |

Ampersand |

38 |

46 |

26 |

100110 |

& |

& |

( |

Left Parenthesis |

40 |

50 |

28 |

101000 |

( |

( |

) |

Right Parenthesis |

41 |

51 |

29 |

101001 |

) |

) |

* |

Asterisk |

42 |

52 |

2A |

101010 |

* |

* |

+ |

Plus Sign |

43 |

53 |

2B |

101011 |

+ |

+ |

, |

Comma |

44 |

54 |

2C |

101100 |

, |

, |

/ |

Slash |

47 |

57 |

2F |

101111 |

/ |

/ |

; |

Semicolon |

59 |

73 |

3B |

111011 |

; |

; |

< |

Less Than |

60 |

74 |

3C |

111100 |

< |

< |

= |

Equals Sign |

61 |

75 |

3D |

111101 |

= |

= |

> |

Greater Than |

62 |

76 |

3E |

111110 |

> |

> |

? |

Question Mark |

63 |

77 |

3F |

111111 |

? |

? |

@ |

At Sign |

64 |

100 |

40 |

1000000 |

@ |

@ |

[ |

Left Square Bracket |

91 |

133 |

5B |

1011011 |

[ |

[ |

\ |

Backslash |

92 |

134 |

5C |

1011100 |

\ |

\ |

] |

Right Square Bracket |

93 |

135 |

5D |

1011101 |

] |

] |

^ |

Caret or Circumflex |

94 |

136 |

5E |

1011110 |

^ |

&hat; |

_ |

Underscore |

95 |

137 |

5F |

1011111 |

_ |

_ |

Grave Accent |

96 |

140 |

60 |

1100000 |

` |

` |

|

{ |

Left Curly Bracket |

123 |

173 |

7B |

1111011 |

{ |

{ |

| |

Vertical Bar |

124 |

174 |

7C |

1111100 |

| |

| |

} |

Right Curly Bracket |

125 |

175 |

7D |

1111101 |

} |

} |

~ |

Tilde |

126 |

176 |

7E |

1111110 |

~ |

˜ |

58 |

: |

Colon |

72 |

3A |

111010 |

: |

: |

65 |

A |

A |

101 |

41 |

1000001 |

A |

|

66 |

B |

B |

102 |

42 |

1000010 |

B |

|

67 |

C |

C |

103 |

43 |

1000011 |

C |

|

68 |

D |

D |

104 |

44 |

1000100 |

D |

|

69 |

E |

E |

105 |

45 |

1000101 |

E |

|

70 |

F |

F |

106 |

46 |

1000110 |

F |

|

71 |

G |

G |

107 |

47 |

1000111 |

G |

|

72 |

H |

H |

110 |

48 |

1001000 |

H |

|

73 |

I |

I |

111 |

49 |

1001001 |

I |

|

74 |

J |

J |

112 |

4A |

1001010 |

J |

|

75 |

K |

K |

113 |

4B |

1001011 |

K |

|

76 |

L |

L |

114 |

4C |

1001100 |

L |

|

77 |

M |

M |

115 |

4D |

1001101 |

M |

|

79 |

O |

O |

117 |

4F |

1001111 |

O |

|

80 |

P |

P |

120 |

50 |

1010000 |

P |

|

81 |

Q |

Q |

121 |

51 |

1010001 |

Q |

|

82 |

R |

R |

122 |

52 |

1010010 |

R |

|

83 |

S |

S |

123 |

53 |

1010011 |

S |

|

84 |

T |

T |

124 |

54 |

1010100 |

T |

|

85 |

U |

U |

125 |

55 |

1010101 |

U |

|

86 |

V |

V |

126 |

56 |

1010110 |

V |

|

87 |

W |

W |

127 |

57 |

1010111 |

W |

|

88 |

X |

X |

130 |

58 |

1011000 |

X |

|

89 |

Y |

Y |

131 |

59 |

1011001 |

Y |

|

90 |

Z |

Z |

132 |

5A |

1011010 |

Z |

|

92 |

\ |

Backslash |

134 |

5C |

01011100 |

\ |

|

97 |

a |

a |

141 |

61 |

1100001 |

a |

|

98 |

b |

b |

142 |

62 |

1100010 |

b |

|

99 |

c |

c |

143 |

63 |

1100011 |

c |

|

100 |

d |

d |

144 |

64 |

1100100 |

d |

|

101 |

e |

e |

145 |

65 |

1100101 |

e |

|

102 |

f |

f |

146 |

66 |

1100110 |

f |

|

103 |

g |

g |

147 |

67 |

1100111 |

g |

|

104 |

h |

h |

150 |

68 |

1101000 |

h |

|

105 |

i |

i |

151 |

69 |

1101001 |

i |

|

106 |

j |

j |

152 |

6A |

1101010 |

j |

|

107 |

k |

k |

153 |

6B |

1101011 |

k |

|

108 |

l |

l |

154 |

6C |

1101100 |

l |

|

109 |

m |

m |

155 |

6D |

1101101 |

m |

|

110 |

n |

n |

156 |

6E |

1101110 |

n |

|

111 |

o |

o |

157 |

6F |

1101111 |

o |

|

112 |

p |

p |

160 |

70 |

1110000 |

p |

|

113 |

q |

q |

161 |

71 |

1110001 |

q |

|

114 |

r |

r |

162 |

72 |

1110010 |

r |

|

115 |

s |

s |

163 |

73 |

1110011 |

s |

|

116 |

t |

t |

164 |

74 |

1110100 |

t |

|

117 |

u |

u |

165 |

75 |

1110101 |

u |

|

118 |

v |

v |

166 |

76 |

1110110 |

v |

|

119 |

w |

w |

167 |

77 |

1110111 |

w |

|

120 |

x |

x |

170 |

78 |

1111000 |

x |

|

121 |

y |

y |

171 |

79 |

1111001 |

y |

|

122 |

z |

z |

172 |

7A |

1111010 |

z |

Non-Printable Characters

A tab can be specified by escaping it, for example \t. Other non-printable characters can be specified using their octal representations, by using the E'\000' format, where 000 is the octal value of the character.

For example, ASCII character 15, known as “shift in”, can be specified using E'\017'.

The following table shows the supported non-printable ASCII characters:

Character |

Description |

Octal |

ASCII |

Hex |

Binary |

HTML Code |

HTML Name |

NUL |

Null |

0 |

0 |

0 |

0 |

� |

|

SOH |

Start of Heading |

1 |

1 |

1 |

1 |

|

|

STX |

Start of Text |

2 |

2 |

2 |

10 |

|

|

ETX |

End of Text |

3 |

3 |

3 |

11 |

|

|

EOT |

End of Transmission |

4 |

4 |

4 |

100 |

|

|

ENQ |

Enquiry |

5 |

5 |

5 |

101 |

|

|

ACK |

Acknowledge |

6 |

6 |

6 |

110 |

|

|

BEL |

Bell |

7 |

7 |

7 |

111 |

|

|

BS |

Backspace |

10 |

8 |

8 |

1000 |

|

|

HT |

Horizontal Tab |

11 |

9 |

9 |

1001 |

|

|

VT |

Vertical Tab |

13 |

11 |

0B |

1011 |

|

|

FF |

NP Form Feed, New Page |

14 |

12 |

0C |

1100 |

|

|

SO |

Shift Out |

16 |

14 |

0E |

1110 |

|

|

SI |

Shift In |

17 |

15 |

0F |

1111 |

|

|

DLE |

Data Link Escape |

20 |

16 |

10 |

10000 |

|

|

DC1 |

Device Control 1 |

21 |

17 |

11 |

10001 |

|

|

DC2 |

Device Control 2 |

22 |

18 |

12 |

10010 |

|

|

DC3 |

Device Control 3 |

23 |

19 |

13 |

10011 |

|

|

DC4 |

Device Control 4 |

24 |

20 |

14 |

10100 |

|

|

NAK |

Negative Acknowledge |

25 |

21 |

15 |

10101 |

|

|

SYN |

Synchronous Idle |

26 |

22 |

16 |

10110 |

|

|

ETB |

End of Transmission Block |

27 |

23 |

17 |

10111 |

|

|

CAN |

Cancel |

30 |

24 |

18 |

11000 |

|

|

EM |

End of Medium |

31 |

25 |

19 |

11001 |

|

|

SUB |

Substitute |

32 |

26 |

1A |

11010 |

|

|

ESC |

Escape |

33 |

27 |

1B |

11011 |

|

|

FS |

File Separator |

34 |

28 |

1C |

11100 |

|

|

GS |

Group Separator |

35 |

29 |

1D |

11101 |

|

|

RS |

Record Separator |

36 |

30 |

1E |

11110 |

|

|

US |

Unit Separator |

37 |

31 |

1F |

11111 |

|

|

DEL |

Delete |

177 |

127 |

7F |

1111111 |

|

Unsupported Field Delimiters

The following ASCII field delimiters (octal range 001 - 176) are not supported:

The following table shows the unsupported ASCII field delimiters:

ASCII |

Character |

Description |

Octal |

Hex |

Binary |

HTML Code |

HTML Name |

10 |

LF |

NL Line Feed, New Line |

12 |

0A |

1010 |

|

|

13 |

CR |

Carriage Return |

15 |

0D |

1101 |

|

|

34 |

“ |

Double Quote |

42 |

22 |

100010 |

" |

" |

45 |

- |

Minus Sign |

55 |

2D |

101101 |

- |

− |

46 |

. |

Period |

56 |

2E |

101110 |

. |

. |

48 |

0 |

Zero |

60 |

30 |

110000 |

0 |

|

49 |

1 |

Number One |

61 |

31 |

110001 |

1 |

|

50 |

2 |

Number Two |

62 |

32 |

110010 |

2 |

|

51 |

3 |

Number Three |

63 |

33 |

110011 |

3 |

|

52 |

4 |

Number Four |

64 |

34 |

110100 |

4 |

|

53 |

5 |

Number Five |

65 |

35 |

110101 |

5 |

|

54 |

6 |

Number Six |

66 |

36 |

110110 |

6 |

|

55 |

7 |

Number Seven |

67 |

37 |

110111 |

7 |

|

56 |

8 |

Number Eight |

70 |

38 |

111000 |

8 |

|

57 |

9 |

Number Nine |

71 |

39 |

111001 |

9 |

|

58 |

: |

Colon |

72 |

3A |

111010 |

: |

: |

92 |

\ |

Backslash |

134 |

5C |

01011100 |

\ |

|

78 |

N |

N |

116 |

4E |

1001110 |

N |

Capturing Rejected Rows

Prior to the column process and storage, the COPY command parses the data.

Whenever the data can’t be parsed because it is improperly formatted or doesn’t match the data structure, the entire record (or row) will be rejected.

When ERROR_LOG is not used, the COPY command will stop and roll back the transaction upon the first error.

CSV Support

By default, SQream DB’s CSV parser can handle RFC 4180 standard CSVs , but can also be modified to support non-standard CSVs (with multi-character delimiters, unquoted fields, etc).

All CSV files should be prepared according to these recommendations:

Files are UTF-8 or ASCII encoded

Field delimiter is an ASCII character or characters

Record delimiter, also known as a new line separator, is a Unix-style newline (

\n), DOS-style newline (\r\n), or Mac style newline (\r).Fields are optionally enclosed by double-quotes, or mandatory quoted if they contain one of the following characters:

The record delimiter or field delimiter

A double quote character

A newline

If a field is quoted, any double quote that appears must be double-quoted (similar to the string literals quoting rules. For example, to encode

What are "birds"?, the field should appear as"What are ""birds""?".Other modes of escaping are not supported (e.g.

1,"What are \"birds\"?"is not a valid way of escaping CSV values).

Marking Null Markers

NULL values can be marked in two ways in the CSV:

An explicit null marker. For example,

col1,\N,col3An empty field delimited by the field delimiter. For example,

col1,,col3

Note

If a text field is quoted but contains no content ("") it is considered an empty text field. It is not considered NULL.

Examples

Loading a Standard CSV File

COPY table_name FROM WRAPPER csv_fdw OPTIONS (location = '/tmp/file.csv');

Skipping Faulty Rows

COPY table_name FROM WRAPPER csv_fdw OPTIONS (location = '/tmp/file.csv', continue_on_error = true);

Skipping a Maximum of 100 Faulty Rows

COPY table_name FROM WRAPPER csv_fdw OPTIONS (location = '/tmp/file.csv', continue_on_error = true, error_count = 100);

Loading a Pipe Separated Value (PSV) File

COPY table_name FROM WRAPPER csv_fdw OPTIONS (location = '/tmp/file.psv', delimiter = '|');

Loading a Tab Separated Value (TSV) File

COPY table_name FROM WRAPPER csv_fdw OPTIONS (location = '/tmp/file.tsv', delimiter = '\t');

Loading an ORC File

COPY table_name FROM WRAPPER orc_fdw OPTIONS (location = '/tmp/file.orc');

Loading a Parquet File

COPY table_name FROM WRAPPER parquet_fdw OPTIONS (location = '/tmp/file.parquet');

Loading a JSON File

COPY t FROM WRAPPER json_fdw OPTIONS (location = 'somefile.json');

Loading an AVRO File

COPY t FROM WRAPPER fdw_name OPTIONS ([ copy_from_option [, ...] ]);

Loading a Text File with Non-Printable Delimiters

In the file below, the separator is DC1, which is represented by ASCII 17 decimal or 021 octal.

COPY table_name FROM WRAPPER psv_fdw OPTIONS (location = '/tmp/file.txt', delimiter = E'\021');

Loading a Text File with Multi-Character Delimiters

In the file below, the separator is ^|.

COPY table_name FROM WRAPPER psv_fdw OPTIONS (location = '/tmp/file.txt', delimiter = '^|');

In the file below, the separator is '|. The quote character has to be repeated, as per the literal quoting rules.

COPY table_name FROM WRAPPER psv_fdw OPTIONS (location = '/tmp/file.txt', delimiter = ''''|');

Loading Files with a Header Row

Use OFFSET to skip rows.

Note

When loading multiple files (e.g. with wildcards), this setting affects each file separately.

COPY table_name FROM WRAPPER csv_fdw OPTIONS (location = '/tmp/file.psv', delimiter = '|', offset = 2);

Loading Files Using DELETE_SOURCE_ON_SUCCESS

-- Single file:

COPY t FROM WRAPPER json_fdw OPTIONS (location = '/tmp/wrappers/t.json', DELETE_SOURCE_ON_SUCCESS = true);

-- Multiple files:

COPY t FROM WRAPPER csv_fdw OPTIONS (location = '/tmp/wrappers/group*.csv', DELETE_SOURCE_ON_SUCCESS = true);

Loading Files Formatted for Windows (\r\n)

COPY table_name FROM WRAPPER csv_fdw OPTIONS (location = '/tmp/file.psv', delimiter = '\r\n');

Loading a File from a Public S3 Bucket

Note

The bucket must be publicly available and objects can be listed

COPY table_name FROM WRAPPER csv_fdw OPTIONS (location = 's3://sqream-demo-data/file.csv', delimiter = '\r\n', offset = 2);

Loading a File From a Google Cloud Platform Bucket

To access a Google Cloud Platform (GCP) Bucket it is required that your environment be authorized.

COPY table_name FROM WRAPPER csv_fdw OPTIONS (location = 'gs://<gcs path>/<gcs_bucket>/*');

Loading a File From Azure

To access Azure it is required that your environment be authorized.

COPY table_name FROM WRAPPER csv_fdw OPTIONS(location = 'azure://sqreamrole.core.windows.net/sqream-demo-data/file.csv');

Loading Files from an Authenticated S3 Bucket

COPY table_name FROM WRAPPER psv_fdw OPTIONS (location = 's3://secret-bucket/*.csv', DELIMITER = '\r\n', OFFSET = 2, AWS_ID = '12345678', AWS_SECRET = 'super_secretive_secret');

Saving Rejected Rows to a File

Note

When loading multiple files (e.g. with wildcards), this error threshold is for the entire transaction.

COPY table_name FROM WRAPPER csv_fdw

OPTIONS

(

location = '/tmp/file.csv'

,continue_on_error = true

,error_log = '/temp/load_error.log'

);

COPY table_name FROM WRAPPER csv_fdw

OPTIONS

(

location = '/tmp/file.psv'

,delimiter '|'

,error_log = '/temp/load_error.log' -- Save error log

,rejected_data = '/temp/load_rejected.log' -- Only save rejected rows

,limit = 100 -- Only load 100 rows

,error_count = 5 -- Stop the load if 5 errors reached

);

Loading CSV Files from a Set of Directories

COPY table_name FROM WRAPPER csv_fdw OPTIONS (location = '/tmp/2019_08_*/*.csv');

Rearranging Destination Columns

When the source of the files does not match the table structure, tell the COPY command what the order of columns should be

COPY table_name (fifth, first, third) FROM WRAPPER csv_fdw OPTIONS (location = '/tmp/*.csv');

Note

Any column not specified will revert to its default value or NULL value if nullable

Loading Non-Standard Dates

If your files contain dates in a format other than ISO8601, you can specify a parsing format to convert them during the import process. This ensures the dates are stored internally as ISO8601 within the database.

In this example, date_col1 and date_col2 in the table are non-standard. date_col3 is mentioned explicitly, but can be left out. Any column that is not specified is assumed to be ISO8601.

COPY my_table (date_col1, date_col2, date_col3) FROM WRAPPER csv_fdw OPTIONS (location = '/tmp/my_data.csv', offset = 2, datetime_format 'DMY');

Loading Specific Columns

Loading specific columns using the COPY FROM command:

Does not support CSV files

Requires that the target table columns be nullable

COPY

new_nba (name, salary)

FROM

WRAPPER

parquet_fdw

OPTIONS

(

LOCATION = '/tmp/nba.parquet'

);